Adobe's AI problem

In Short: Adobe's Firefly, promoted as an ethical AI image generator trained only on proprietary and public domain images, was also trained on AI-generated images from competitors' tools.

What’s going on?

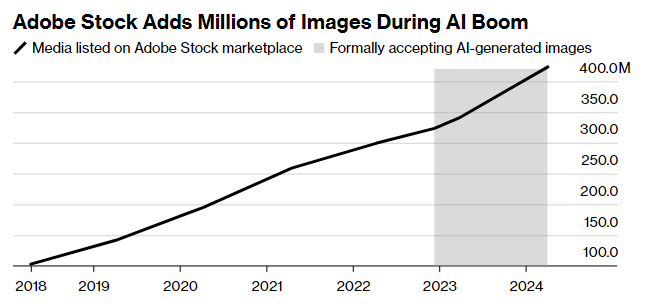

Adobe set itself apart in the digital creativity market by launching Firefly, an AI tool presented as a paragon of responsible AI usage. The tool was advertised as using only Adobe Stock and public domain images, avoiding the contentious practices of competitors who scrape the internet for training data.

However, disclosures in Adobe’s online communities revealed that about 5% of Firefly’s training dataset consisted of images generated by other AI services, such as Midjourney and OpenAI’s DALL-E. This revelation has sparked internal debate and external criticism regarding Adobe’s commitment to transparency and ethical standards in AI development.

Why does it matter?

The inclusion of competitor-generated images in Firefly's training data raises significant ethical and transparency issues. It challenges Adobe’s claims of superior ethical practices and could undermine trust among users and contributors.

This scenario highlights a common dilemma in AI development: balancing rapid technological advancement with ethical integrity. Adobe’s situation illustrates the broader industry challenge of maintaining transparency and ethical conduct, both of which are crucial for sustaining user trust and supporting the responsible evolution of AI technologies.

⚖️ How does this impact Law Firms?

Intellectual Property (IP) Law:

- IP Rights Management and Licensing: IP lawyers will be increasingly called upon to negotiate and draft licensing agreements for the use of AI-generated images, ensuring that such creations do not infringe on existing copyrights or trademarks. They will guide clients, including technology firms and content creators, on securing the necessary rights for the AI-generated content and on structuring agreements that protect their IP assets in the evolving digital landscape.

- Dispute Resolution and Litigation: As the lines between AI-generated content and human-created content blur, IP lawyers will find themselves handling more disputes over copyright ownership and infringement. This includes representing clients in litigation where AI has inadvertently created content that mirrors copyrighted materials, advising on the nuances of "authorship" in the digital age, and ensuring proper representation in court to address these complex and emerging legal issues.

Technology and Data Security Law:

- Compliance Advisory on AI Development and Use: Technology lawyers will advise software companies and developers on compliance with UK and international regulations governing AI. This includes guidance on ethical AI use, data sourcing, and the implications of using AI-generated content. These lawyers will help ensure that AI tools like Adobe Firefly comply with legal standards, thus preventing regulatory backlash and fostering trust among users and contributors.

- Risk Management and Data Protection Impact Assessments: Given the sensitivity of data used in training AI systems, lawyers specializing in technology and data security will need to conduct thorough data protection impact assessments for companies. These assessments help ensure that any personal data used in training AI meets the strict standards set by laws like the GDPR. These lawyers will also provide ongoing advice on risk management strategies to mitigate potential legal issues arising from AI deployment.

Commercial Law: